The request hedging pattern is introduced in the paper The Tail At Scale as a solution by Google to address the long tail effect in microservices. It is also one of the two retry modes in gRPC.

The hedging client sends the same request to multiple nodes, and once it receives the first result, it cancels the remaining in-flight requests. This pattern primarily aims to achieve predictable latency.

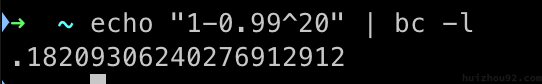

For instance, if our service’s call chain consists of 20 nodes, each with a P99 latency of 1 second, there is a statistically significant 18.2% chance that the request time will exceed 1 second.

Using the hedging pattern, we consistently obtain results from the fastest node, thus preventing unpredictable long tail latencies (service faults are not within the scope here).

In Golang, we can quickly implement the hedging request pattern using context. For example, in the code snippet below, we make five requests to the same backend service, but we only take the first one that returns:

|

|

You can find the complete code at: https://go.dev/play/p/fY9Lj_M7ZYE

The benefit of this approach is that we can mitigate long-tail latency in services and maintain the delays between services within a controllable range. However, directly implementing it can lead to multiple times the load, so careful design is necessary.

Why Does Long Tail Latency Occur?

There are several reasons for long tail latency, such as:

- Hybrid deployments have become mainstream, meaning many users compete for critical resources on the same physical machine, potentially leading to long-term effects due to resource scheduling.

- Garbage Collection (GC), which doesn’t require much explanation; Golang’s Stop-The-World (STW) can amplify long tail latency.

- Queuing, including message queues, network delays, etc.

Is there a way to avoid the request amplification caused by the hedging request pattern? The blog post Go High-Performance Programming EP7: Use singleflight To Merge The Same Request discusses how to use SingleFlight to merge identical requests. In this scenario, using SingleFlight can partially alleviate duplicate requests.

Another approach is to initially send only one request. If the P95 threshold does not respond, immediately request the second node. This method limits duplicate requests to about 5% and significantly reduces long-tail requests.

In the paper The Tail At Scale, several other methods are suggested to address long tail requests:

- Service differentiation and priority queues: Different service classes can be prioritized to schedule user requests over non-interactive requests. Short low-level queues allow faster execution of higher-level strategies.

- Reducing head-of-line blocking: Transforming time-consuming requests into more minor, more manageable requests is also a standard web performance enhancement method.

- Micro-partitioning: Fine-grained load adjustments to minimize the latency impact caused by uneven load distribution.

- Implementing circuit breakers for poorly performing machines.

- …

Do you have other great ways to deal with long-tail requests?