From the perspective of the Go compiler, memory is allocated in two places: the stack and the heap. For most developers the difference rarely matters, because the compiler decides where each value lives. From a performance standpoint, however, the two differ significantly. Memory allocated on a function’s stack is reclaimed automatically when the function returns. Memory allocated on the heap is managed by the garbage collector (GC), which is a more complex process and adds overhead. When a value that could have lived on the stack ends up on the heap instead, it is said to escape. To optimize performance, developers should keep unnecessary heap allocations to a minimum.

This article was first published in the Medium MPP plan.

Why Does Escape Happen?

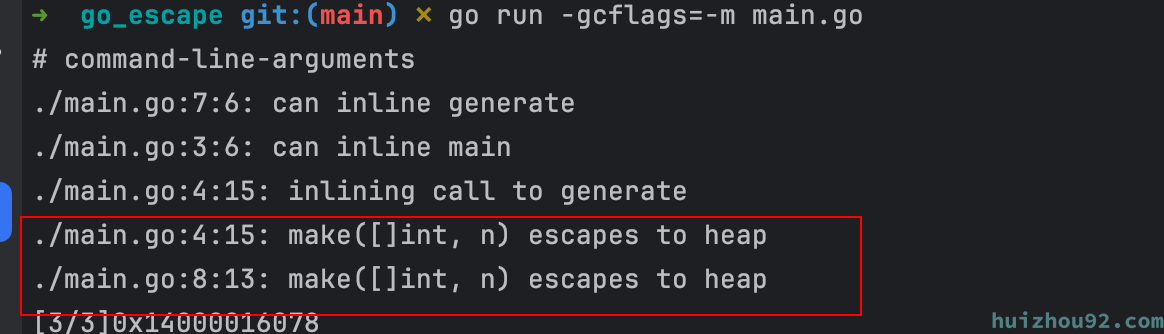

The primary reasons for memory escape are simple: either the compiler cannot determine the variable’s lifetime, or the stack does not have enough space for the required memory. The Go compiler uses escape analysis to decide whether a variable should be allocated on the stack or the heap. This analysis happens at compile time, and its decisions can be printed by passing the -gcflags=-m flag to go build, which reports whether each variable escapes to the heap.
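As a minimal sketch of how to inspect these decisions, the program below can be built with go build -gcflags=-m. The function names onStack and onHeap are illustrative and not taken from the original article, and the exact wording of the compiler diagnostics varies between Go versions, so no output is reproduced here.

```go
package main

import "fmt"

// onStack only uses x locally, so the compiler is free to keep it on the stack.
func onStack() int {
	x := 10
	return x * 2
}

// onHeap returns the address of x, so x must outlive the call.
// Escape analysis is expected to move x to the heap.
func onHeap() *int {
	x := 10
	return &x
}

func main() {
	// Build with: go build -gcflags=-m
	// Repeating the flag (-gcflags="-m -m") prints more detail.
	fmt.Println(onStack(), *onHeap())
}
```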

Impact of Escape on Performance

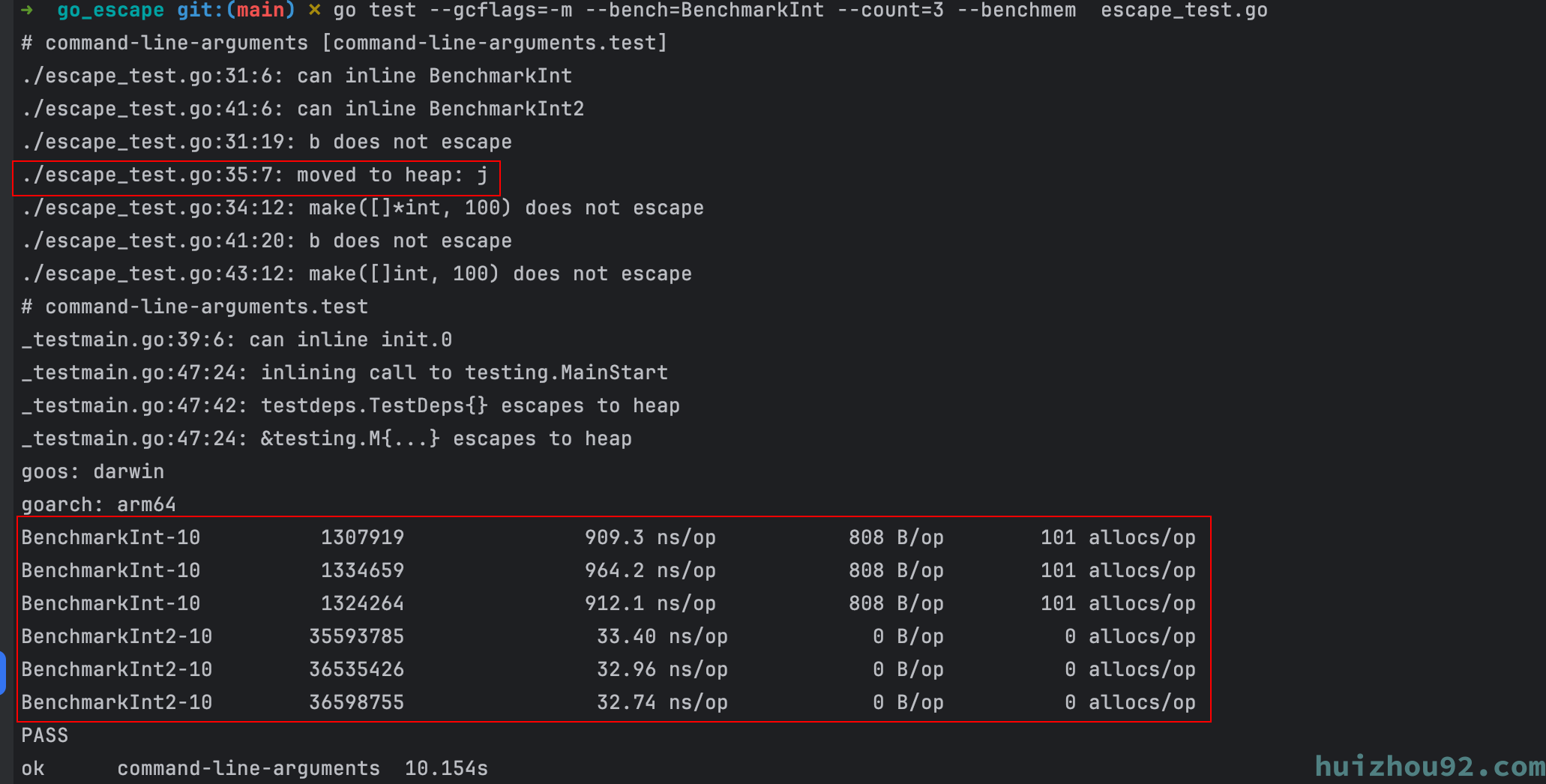

A straightforward benchmark test illustrates the impact of memory escaping. In the example below, BenchmarkInt uses a pointer array, causing escape with &j, whereas BenchmarkInt2 does not cause escape.
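The article’s original benchmark code is not available here, so the following is only a stand-in under stated assumptions: sumPointers and sumValues are hypothetical names, and the loop copies j into a fresh variable so that the behaviour does not depend on the loop-variable semantics of a particular Go version. It uses testing.Benchmark so that it can run as an ordinary program; the numbers it prints will differ from those quoted in the article.

```go
package main

import (
	"fmt"
	"testing"
)

// sink keeps results alive so the compiler cannot discard the work.
var sink int

// sumPointers stores the address of a fresh variable on every iteration.
// Each of those variables is expected to be heap-allocated.
func sumPointers() int {
	ptrs := make([]*int, 100)
	for j := 0; j < 100; j++ {
		v := j // copy of the loop variable (assumption, see lead-in)
		ptrs[j] = &v
	}
	sum := 0
	for _, p := range ptrs {
		sum += *p
	}
	return sum
}

// sumValues stores plain ints, so nothing needs to outlive the function.
func sumValues() int {
	vals := make([]int, 100)
	for j := 0; j < 100; j++ {
		vals[j] = j
	}
	sum := 0
	for _, v := range vals {
		sum += v
	}
	return sum
}

func main() {
	testing.Init() // sets up the testing flags when running outside "go test"
	withPtr := testing.Benchmark(func(b *testing.B) {
		b.ReportAllocs()
		for i := 0; i < b.N; i++ {
			sink = sumPointers()
		}
	})
	withVal := testing.Benchmark(func(b *testing.B) {
		b.ReportAllocs()
		for i := 0; i < b.N; i++ {
			sink = sumValues()
		}
	})
	fmt.Println("pointers:", withPtr.String(), withPtr.MemString())
	fmt.Println("values:  ", withVal.String(), withVal.MemString())
}
```

Comparing the allocs/op column of the two lines gives a rough picture of what the pointer version costs.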


The results show that BenchmarkInt2 is 30 times faster than BenchmarkInt, with no memory allocations, whereas BenchmarkInt has 101 memory allocations. This test highlights the importance of conducting escape analysis.

Common Scenarios of Memory Escaping

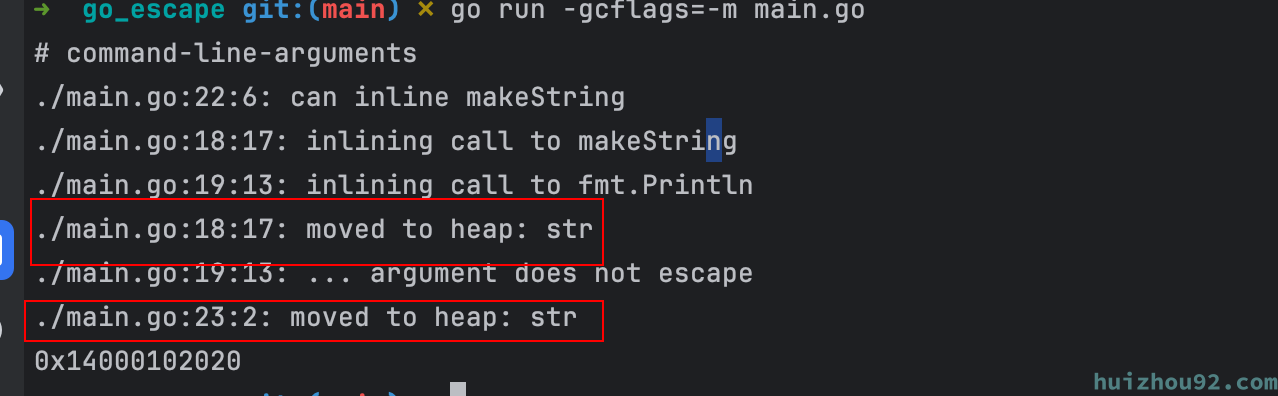

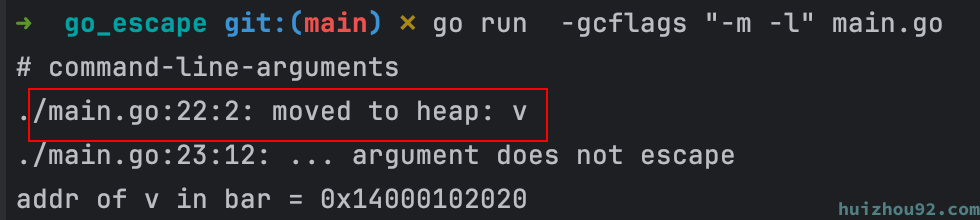

Variable and Pointer Escaping

When a variable’s lifetime extends beyond the scope of the function that created it, for example because the function returns a pointer to it, the compiler allocates it on the heap. This is known as variable escape or pointer escape.
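A small sketch of two ways a lifetime can extend beyond a function: returning a pointer and storing a pointer in a package-level variable. The user type, the registry variable, and the function names are hypothetical and used only for illustration.

```go
package main

import "fmt"

type user struct {
	name string
	age  int
}

// registry is a package-level variable, so anything it points to
// must stay alive after the storing function returns.
var registry *user

// newUserValue returns a copy; the local u can live on the stack.
func newUserValue(name string, age int) user {
	u := user{name: name, age: age}
	return u
}

// newUserPointer returns the address of u, so u is expected to escape.
func newUserPointer(name string, age int) *user {
	u := user{name: name, age: age}
	return &u
}

// register stores the address of u in a global, so u is expected to escape.
func register(name string, age int) {
	u := user{name: name, age: age}
	registry = &u
}

func main() {
	v := newUserValue("Ann", 30)
	p := newUserPointer("Bob", 40)
	register("Cy", 50)
	fmt.Println(v.name, p.name, registry.name)
}
```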


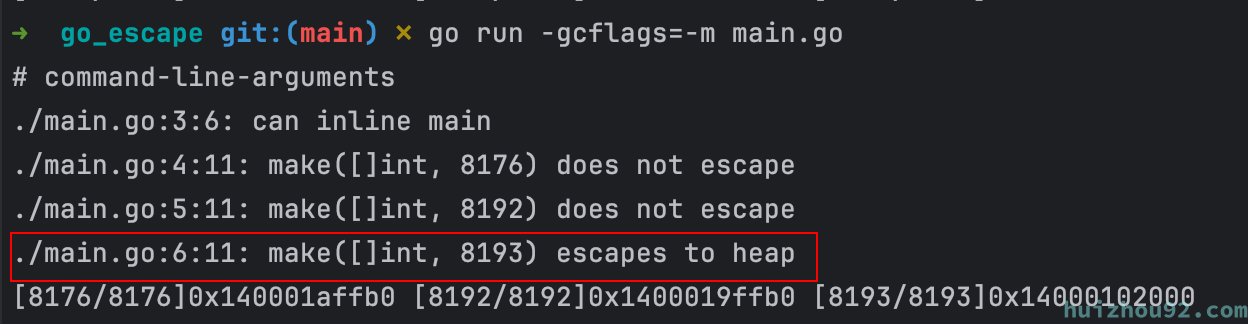

Stack Overflow

The operating system limits the stack size of kernel threads; on a 64-bit system the limit is typically 8 MB, and you can check it with the ulimit -a command. Goroutine stacks are managed separately by the Go runtime, which starts each one at 2 KB and grows it as needed. Even so, an allocation that is too large is placed on the heap: for implicit allocations such as those made with make, the threshold is 64 KB. For instance, on a 64-bit platform where an int is 8 bytes, a slice of 8192 int elements (exactly 64 KB) can stay on the stack, while a slice of 8193 elements escapes to the heap.
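The 64 KB threshold can be sketched with two slices of int created by make, assuming a 64-bit platform where an int is 8 bytes. The function names atLimit and overLimit are hypothetical. Building with -gcflags=-m should show the difference, although the exact messages depend on the Go version.

```go
package main

import "fmt"

// atLimit allocates 8192 ints (64 KB when an int is 8 bytes),
// which is expected to stay on the stack.
func atLimit() int {
	nums := make([]int, 8192)
	for i := range nums {
		nums[i] = i
	}
	return nums[len(nums)-1]
}

// overLimit allocates 8193 ints (just over 64 KB),
// which is expected to escape to the heap.
func overLimit() int {
	nums := make([]int, 8193)
	for i := range nums {
		nums[i] = i
	}
	return nums[len(nums)-1]
}

func main() {
	fmt.Println(atLimit(), overLimit())
}
```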

Variables with Unknown Size


Because the size of the allocation depends on generate’s parameter, whose value is known only at runtime, the compiler cannot reserve a fixed amount of stack space for it, so the memory escapes to the heap.
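A hedged sketch of the contrast, assuming the original generate function created a slice whose length came from its parameter. The names sumFixed and sumDynamic are hypothetical. Newer compiler releases may optimize some small variable-sized cases, so the -gcflags=-m output is best checked on the Go version in use.

```go
package main

import "fmt"

// sumFixed uses a constant length, so the compiler knows the size
// at compile time and can keep the backing array on the stack.
func sumFixed() int {
	nums := make([]int, 100)
	sum := 0
	for i := range nums {
		nums[i] = i
		sum += nums[i]
	}
	return sum
}

// sumDynamic takes the length from n, which is only known at runtime,
// so the backing array is expected to be allocated on the heap.
func sumDynamic(n int) int {
	nums := make([]int, n)
	sum := 0
	for i := range nums {
		nums[i] = i
		sum += nums[i]
	}
	return sum
}

func main() {
	fmt.Println(sumFixed(), sumDynamic(100))
}
```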

interface{} Dynamic Type Escape

In Go, the empty interface interface{} can hold a value of any type. When a function parameter is declared as interface{}, the compiler cannot determine the concrete type at compile time, so the value passed in may escape to the heap.


Since fmt.Printf accepts parameters of type any (equivalent to interface{}), v escapes to the heap.
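The effect can be sketched by comparing a function that hands v to the fmt package with one that works on the concrete string type only. The helper names format and shout are hypothetical. Whether a given value is actually heap-allocated depends on the Go version, so the comments describe what -gcflags=-m is expected to report rather than a guaranteed result.

```go
package main

import "fmt"

// format passes v to fmt.Sprintf, whose variadic parameter has type any.
// The conversion of v to an interface value is expected to be reported
// as escaping.
func format(v string) string {
	return fmt.Sprintf("%s", v)
}

// shout only uses the concrete string type, so no interface
// conversion is involved.
func shout(v string) string {
	return v + "!"
}

func main() {
	v := "Hello, World"
	fmt.Printf("%s\n", v) // v is converted to any for this call
	fmt.Println(format(v), shout(v))
}
```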

Closure

In the following example, the function Increase() returns a closure that accesses the external variable n. Thus, n persists beyond the function’s scope, causing it to escape to the heap.
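A minimal version of such a function could look like the sketch below; the body of Increase is reconstructed from the description above and is not necessarily identical to the original listing.

```go
package main

import "fmt"

// Increase returns a closure that captures n by reference.
// Because the closure outlives the call to Increase, n cannot live
// in Increase's stack frame and is expected to be moved to the heap.
func Increase() func() int {
	n := 0
	return func() int {
		n++
		return n
	}
}

func main() {
	inc := Increase()
	fmt.Println(inc()) // 1
	fmt.Println(inc()) // 2
}
```

Each call to Increase creates its own n, so two closures obtained from separate calls count independently.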


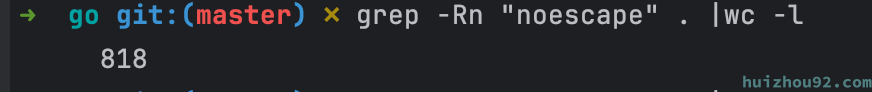

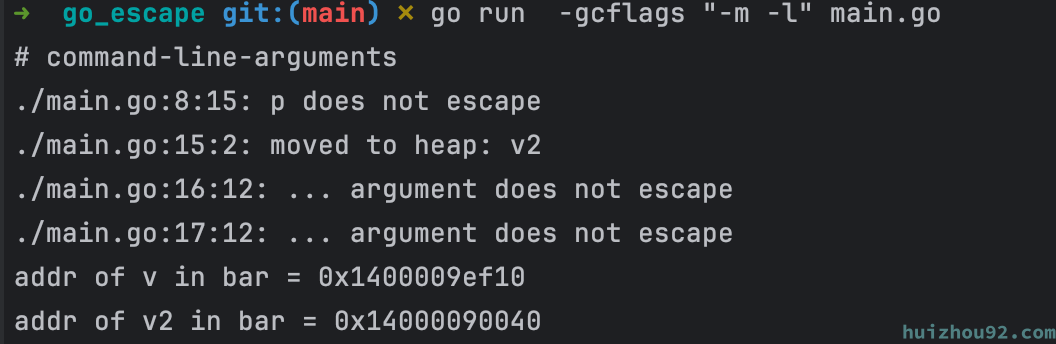

Manually Avoiding Escapes

In the interface{} Dynamic Type Escape example, memory escape occurs even when simply printing “Hello, World”. To prevent v from escaping, we can use the following function from the Go runtime code:


This function, widely used in the Go standard library and runtime, converts a pointer to a uintptr and then back to a pointer. Escape analysis does not follow the value through that conversion, so the data flow is broken and the pointer is not treated as escaping.
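The sketch below follows the shape of the unexported noescape helper in the Go runtime and applies it in a small, self-contained setting. It is not the article’s original listing: the read variable and the functions hidden and direct are hypothetical, chosen so that the pointer is only used while the variable is still alive. Hiding a pointer this way is only safe when the pointer really does not outlive the variable it points to.

```go
package main

import (
	"fmt"
	"unsafe"
)

// noescape mirrors the runtime helper of the same name: the pointer is
// stored in a uintptr variable, which escape analysis does not track.
func noescape(p unsafe.Pointer) unsafe.Pointer {
	x := uintptr(p)
	return unsafe.Pointer(x ^ 0)
}

// read is a package-level function variable (hypothetical). Calls through
// it are indirect, so the compiler has to assume the argument may escape.
var read = func(p *int) int { return *p }

// hidden passes &v through noescape first, so v is expected to stay on
// the stack. This is safe only because read does not keep the pointer.
func hidden() int {
	v := 42
	return read((*int)(noescape(unsafe.Pointer(&v))))
}

// direct passes &v2 to the indirect call, so v2 is expected to be
// moved to the heap.
func direct() int {
	v2 := 42
	return read(&v2)
}

func main() {
	// Build with: go build -gcflags=-m to compare v and v2.
	fmt.Println(hidden(), direct())
}
```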


The output shows that v does not escape, while v2 does.

Conclusion

In typical development scenarios, memory escape analysis is rarely a concern. Based on experience, optimizing a lock can yield better performance improvements than conducting multiple memory escape analyses. During regular development, remember:

- Passing a value copies the entire object, while passing a pointer copies only the address, so both sides refer to the same object. Passing a pointer avoids the copy but can cause the object to escape to the heap, which increases the garbage collection (GC) burden. In code that creates and discards objects frequently, this GC overhead can significantly affect performance.

- As a rule of thumb, pass a pointer when the function needs to modify the original object or when the struct is large. Pass by value when the struct is small and only read.
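The rule of thumb can be sketched with a small struct; the point type and both functions are hypothetical examples, not code from the original article.

```go
package main

import "fmt"

type point struct {
	x, y int
}

// squaredLength only reads a small struct, so taking it by value is
// cheap and gives the compiler no reason to move it to the heap.
func squaredLength(p point) int {
	return p.x*p.x + p.y*p.y
}

// move modifies the caller's struct, so it has to take a pointer.
func move(p *point, dx, dy int) {
	p.x += dx
	p.y += dy
}

func main() {
	p := point{x: 3, y: 4}
	fmt.Println(squaredLength(p)) // 25
	move(&p, 1, 1)
	fmt.Println(p.x, p.y) // 4 5
}
```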