BigCache[1] is a high-performance in-memory caching library designed for applications that demand high concurrency and low latency. This article explores BigCache’s performance optimization techniques, including its sharding mechanism, efficient hashing algorithms, usage of read-write locks, and more, with detailed explanations based on the source code.

Shard & Locking

From a user’s perspective, a cache functions like a large hashtable to store key-value pairs (k/v). Could this be achieved with map[string][]byte and sync.RWMutex?

Yes, but only for low-performance requirements. Although sync.RWMutex optimizes reads and writes, it serializes concurrent writes, even for different keys. This creates bottlenecks under high write concurrency, blocking multiple goroutines and allowing only one writer at a time.

BigCache addresses this by adopting a sharding mechanism inspired by Java’s ConcurrentMap. It divides a large hashtable into multiple smaller shards, each protected by its lock. This strategy, commonly used in high-concurrency scenarios like MongoDB’s sharding, effectively reduces contention. Golang also has a third-party implementation: concurrent-map[2].

Source Code Example: Sharding in BigCache

|

|

Each cache object calculates its hash based on its key: hash(key) % N. Ideally, concurrent requests are evenly distributed across shards, minimizing lock contention and reducing latency

Optimal Number of Shards (N)

Is a larger N always better? No. Excessive shards incur unnecessary overhead. It’s crucial to balance performance and cost by choosing a reasonable N, typically powers of 2 (e.g., 16, 32, 64). This allows modulo operations to use fast bitwise calculations:

|

|

Since for a power N of 2, for any x, the following equation holds.

x mod N = (x & (N - 1)).

So, you only need to use a bitwise AND (&) once to find its remainder.

Choosing the Right Hashing Algorithm

Computer scientists have invented many Hash algorithms, and gopher has implemented many. An ideal hashing algorithm should meet these criteria:

- High randomness in hash values.

- Fast computation.

- Minimal memory allocations to reduce garbage collection (GC) pressure.

BigCache’s default implementation uses the fnv64a algorithm, leveraging bitwise operations on the stack to avoid heap allocations.

|

|

Memory Optimization

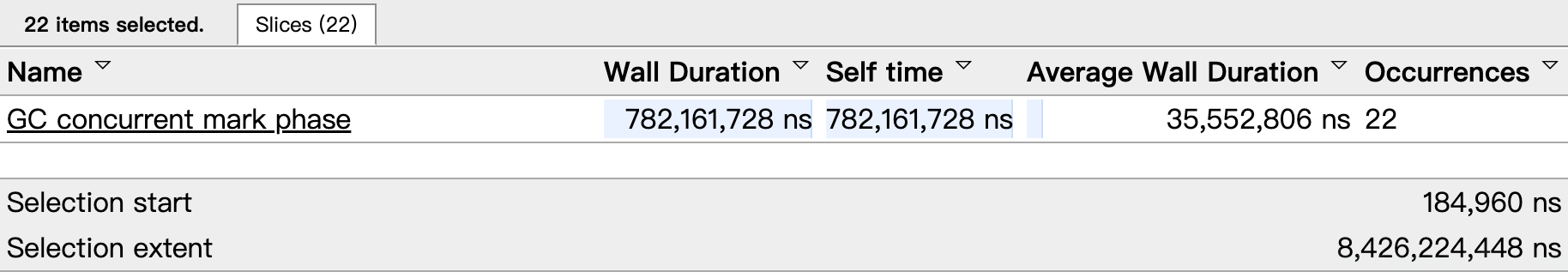

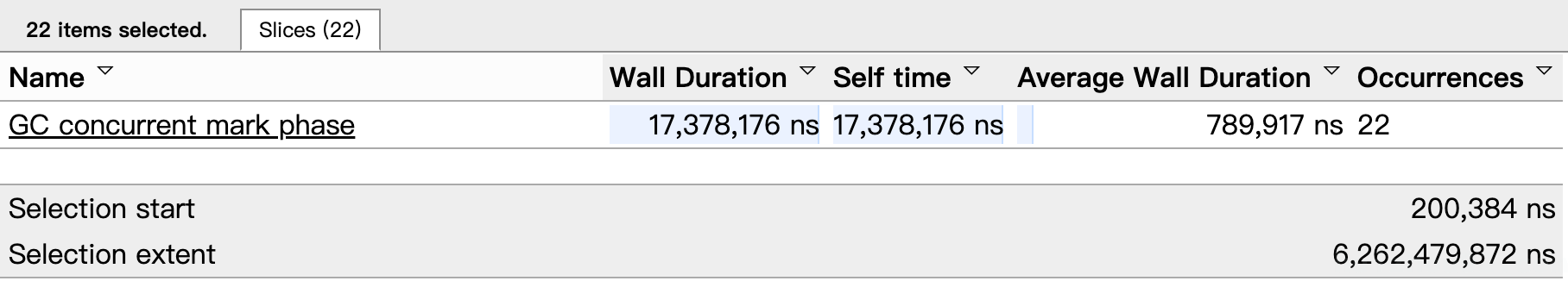

In Go, the garbage collector checks every element in the map during the mark and scan phases. If the cache contains millions of cached objects, the garbage collector’s pointless checking of these objects leads to unnecessary time overhead.

That’s because if the elements in the hashtable contain pointers, the GC scans every element that contains pointers at mark; if they don’t, they are skipped at the mark stage.

We can test this with a simple example.

|

|

Result

|

|

Then, run two tests separately to analyze the GC

|

|

The Wall Duration of NoPointMap is only 2% of PointMap. Although the concurrency of PointMap is small and there is no competition for a single goroutine, the garbage collection takes hundreds of milliseconds to mark and traverse in the mark/scan phase due to the large number of elements.

How does bigcache solve this problem? Disable your users from storing data with pointers on bigcache.

Just kidding, if you did, your users would abandon you. There are several ways to solve this problem.

- Refer to offheap[4] to use customized

Malloc()andFree()to manage memory manually while bypassing theruntimegarbage collection, but this can be more likely to lead to memory leaks. - The second way is to use freecache[5]. freecache implements map with zero GC overhead by reducing the number of pointers. it stores the keys and values in a

ringbufferand uses the index to look up the object.

The waybigcacheimplements this is to use the hash value as the key ofmap[int]int. Serialize the cached object into a pre-allocated large byte array, and then use its offset in the array as the value ofmap[int]int.

|

|

queue.BytesQueue is an array of bytes, on demand. When a []byte is added, it copies the data to the end.

When bigcache deletes a cached element, it just removes the index it was added from from map[uint64]uint32 and sets its data in the queue.BytesQueue queue to 0. The deletion operation creates a lot of " Empty holesand thesewormholeswill not be organized or removed. Because it is implemented using a byte array underneath, the removal of the "holes" is a time-consuming operation that causes locks to be held for too long.bigcache` can only wait for the cleanup mechanism to remove these “holes”.

Some other details:

- Cache objects in

bigcachedo not have the ability to refresh their expiration time. all caches will eventually expire. - The life cycle of all caches is configured by

config.LifeWindowand cannot be set for individual keys.